DEEPCODER: LEARNING TO WRITE PROGRAMS

Basic Information

- Authors: Matej Balog, Alexander L. Gaunt, Marc Brockschmidt, Sebastian Nowozin, Daniel Tarlow

- Publication: ICLR'17

- Description: Generate code based on input-output examples via neural network techniques

INDUCTIVE PROGRAM SYNTHESIS (IPS)

The Inductive Program Synthesis (IPS) problem is the following: given input-output examples, produce a program that has behavior consistent with the examples.

Building an IPS system requires solving two problems:

- Search problem: to find consistent programs we need to search over a suitable set of possible programs. We need to define the set (i.e., the program space) and search procedure.

- Ranking problem: if there are multiple programs consistent with the input-output examples, which one do we return?

Domain Specific Languages (DSLs)

- DSLs are programming languages that are suitable for a specialized domain but are more restrictive than full-featured programming languages.

- Restricted DSLs can also enable more efficient special-purpose search algorithms.

- The choice of DSL also affects the difficulty of the ranking problem.

Search Techniques

Technique for searching for programs consistent with input-output examples.

- Special-purpose algorithm

- Satisfiability Modulo Theories (SMT) solving

Ranking

LEARNING INDUCTIVE PROGRAM SYNTHESIS (LIPS)

The components of LIPS are:

a DSL specification,

An attribute function A that maps programs P of the DSL to finite attribute vectors a = A(P). (Attribute vectors of different programs need not have equal length.) Attributes serve as the link between the machine learning and the search component of LIPS: the machine learning model predicts a distribution q(a | E), where E is the set of input-output examples, and the search procedure aims to search over programs P as ordered by q(A(P) | E). Thus an attribute is useful if it is both predictable from input-output examples, and if conditioning on its value significantly reduces the effective size of the search space.

Possible attributes are the (perhaps position-dependent) presence or absence of high-level functions (e.g., does the program contain or end in a call to SORT). Other possible attributes include control flow templates (e.g., the number of loops and conditionals).a data-generation procedure,

Generate a dataset ((P(n), a(n), E(n)))Nn=1 of programs P(n) in the chosen DSL, their attributes a(n), and accompanying input-output examples E(n)).a machine learning model that maps from input-output examples to program attributes,

Learn a distribution of attributes given input-output examples, q(a | E).a search procedure that searches program space in an order guided by the model from (3).

Interface with an existing solver, using the predicted q(a | E) to guide the search.

DEEPCODER: Instantiation of LIPS

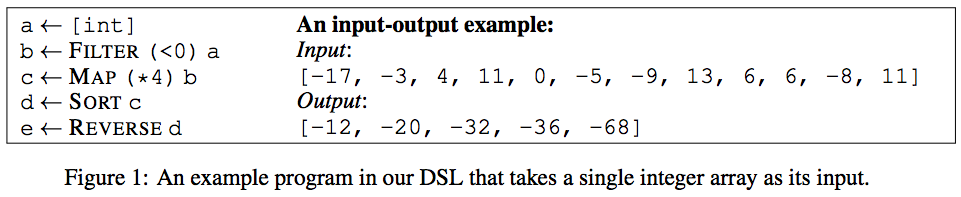

- DSL AND ATTRIBUTES A program in our DSL is a sequence of function calls, where the result of each call initializes a fresh variable that is either a singleton integer or an integer array. Functions can be applied to any of the inputs or previously computed (intermediate) variables. The output of the program is the return value of the last function call, i.e., the last variable. See Fig. 1 for an example program of length T = 4 in our DSL. Overall, our DSL contains the first-order functions HEAD, LAST, TAKE, DROP, ACCESS, MINIMUM, MAXIMUM, REVERSE, SORT, SUM, and the higher-order functions MAP, FILTER, COUNT, ZIPWITH, SCANL1.

- DATA GENERATION

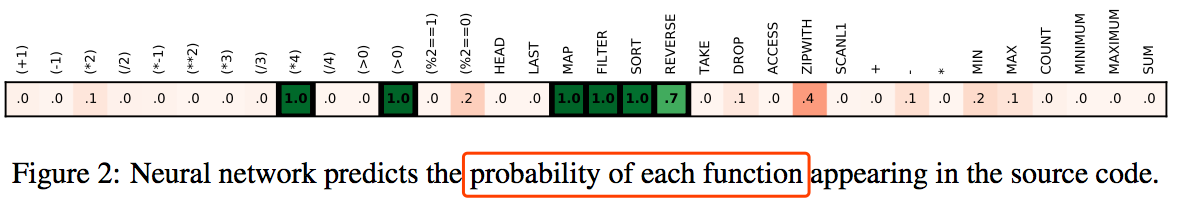

- MACHINE LEARNING MODEL

- an encoder: a differentiable mapping from a set of M input-output examples generated by a single program to a latent real-valued vector, and

- a decoder: a differentiable mapping from the latent vector representing a set of M inputoutput examples to predictions of the ground truth program’s attributes.

- SEARCH

- Depth-first search (DFS)

- “Sort and add” enumeration

- Sketch

- TRAINING LOSS FUNCTION Negative cross entropy loss